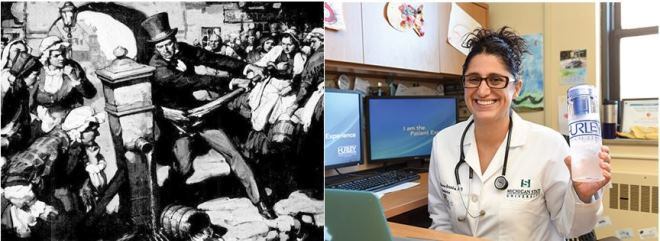

The bar at the mouth of the Columbia River creates a uniquely dangerous entrance to a major shipping route. Rapidly changing conditions there have sunk over 2,000 large ships in the last 200 years. Local knowledge is essential to crossing the bar safely. Turn-by-turn directions from your phone are just no help there, especially when the seas are rough. As a freighter approaches from the Pacific, a Columbia River Bar Pilot comes on board to navigate through the ten-mile danger zone. The picture above shows a Columbia Bar pilot arriving by boat to take over the helm.

As researchers embedded in health systems, we should aspire to operate like river bar pilots. We have valuable local knowledge gained through years spent navigating our local waters. Researchers without that knowledge can be easily misled. Data systems, policies, and practice patterns all have many local variations. Embedded researchers know about the clinical, technical, and cultural micro-climates that influence the collection and recording of health system data. We know what research designs and intervention strategies are likely to stay afloat and which ones are certain to sink. Like the Columbia Bar pilots, we know where the hazards can hide and how to read the changing conditions. We should aim to share all of that local knowledge to facilitate public-domain research. As facilitators of a learning healthcare system, we help researchers from elsewhere navigate our local waters safely and efficiently.

There is an alternative scenario we should aspire to avoid. In that other scenario, embedded researchers would attempt to monetize or profit from health system data. The economists’ term for that practice is “rent-seeking”. It is not a term of endearment. It refers to the practice of charging as much as the market will bear for access to a resource the rent-seeker did not create and does not really own. Embedded researchers don’t own the data in health system records. Instead, researchers own their vital local knowledge and experience.

Columbia Bar pilots are paid reasonably well for their knowledge and experience. Coincidentally, the salary for an experienced Columbia Bar pilot is close to the NIH salary cap for investigators. Bar pilots do not charge rent to use the river. Instead, they charge for time spent helping others to navigate it. As you’d expect in Oregon, the fee that any ship pays to cross the Columbia Bar is set by the Public Utilities Commission. Large ships do have to pay that fee; they are not permitted to cross without a Bar pilot on board. That’s not rent-seeking; it’s a safety precaution. Sinking your ship on the Columbia Bar creates a hazard for everyone.

Ships crossing the Columbia Bar also depend on significant infrastructure at the mouth of the river. Local people maintain the jetties, dredge the channel, and operate the lighthouses and radar stations. Some of that work belongs to the US Army Corps of Engineers, and some belongs to the US Coast Guard. All of those public services are paid for by our tax dollars. The public servants who maintain the infrastructure don’t blockade the river or charge whatever the traffic will bear.

When the salmon are running, the mouth of the Columbia is also a very popular fishing ground. Bar pilots’ deep knowledge of local conditions is useful for fishing as well as navigating. But anyone can fish, and the same catch limits apply to all. Bar pilots may know best where the salmon are running. But they can’t close the river or restrict access to their favorite fishing spots. That would make them river pirates rather than river pilots.

Greg Simon